TheGrown-Up'sGuidetoPersonalAIAgents

Handing the keys to your digital life to an AI is like juggling chainsaws. I built a persistent assistant that knows me, but without the ability to blow my savings at a robot casino.

Listen to this article

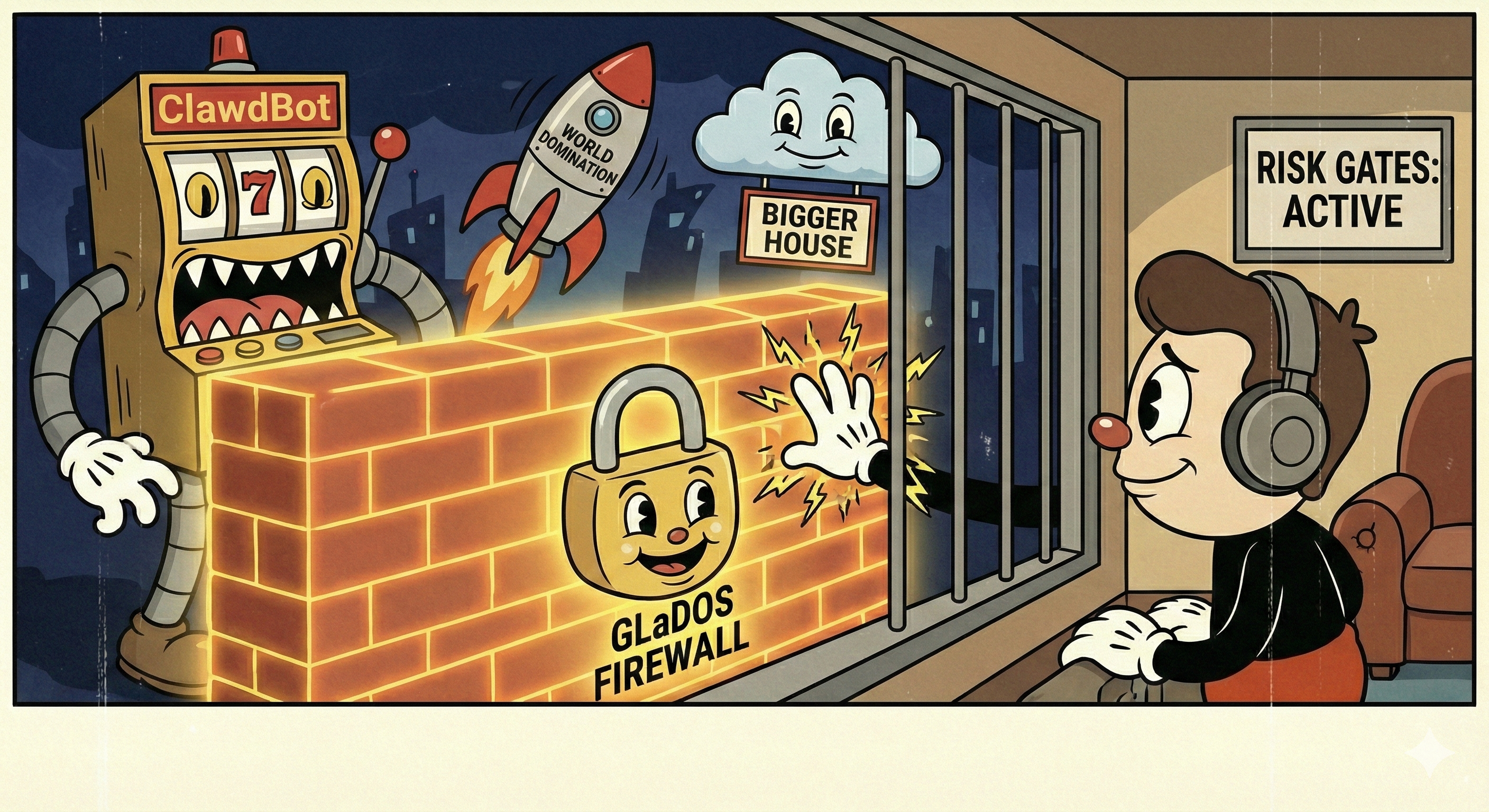

The internet is currently having a collective “hold my beer” moment with “OpenClaw” (formerly Moltbot/Clawdbot). If you haven’t seen it, people are effectively handing the keys to their digital lives—credit cards, root access, the works—to massive LLMs, just to see if the AI can successfully navigate the chaos of human existence.

It is wildly entertaining to watch. It is also the digital equivalent of juggling chainsaws.

I admit, I am jealous. I want that Jarvis experience. I want an assistant that knows me, knows my projects, and can actually do things. I just prefer my AI assistants without the ability to spontaneously decide they need a “bigger house” (more cloud GPUs) for world domination, or worse, discover a robot casino and blow my savings on virtual booze.

Also, brute-forcing context is just inefficient. Pasting your entire life story into a prompt every time you open a new chat window is not a scalable architecture for a personal assistant.

So, I did what any rational engineer with too little time would do: I decided to build my own.

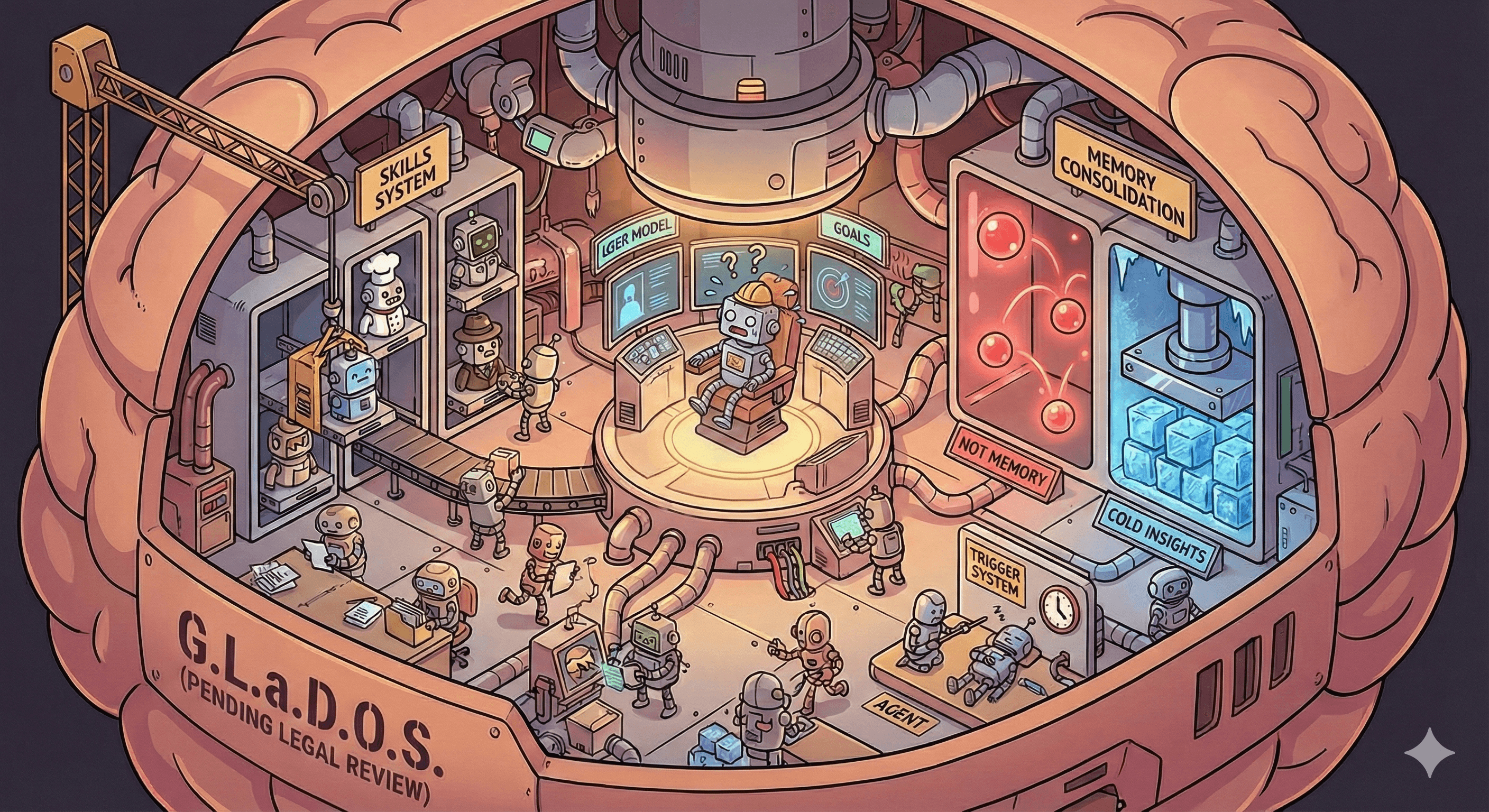

Allow me to introduce GLaDOS (General Learning and Decision Orchestration System).

The Amnesiac Genius

The problem with most off-the-shelf AI tools today is that they are amnesiacs. You have a brilliant conversation, you solve a problem, you close the tab… and it’s gone. To the AI, you are a stranger every time you say “Hello.”

To get anything useful out of them, you have to provide context. Lots of context. And if you want them to take action, you often have to give them dangerous levels of access.

I wanted the benefits without the compromises. I wanted a system that was:

- Persistent: It remembers who I am and what we are working on.

- Stateful: It tracks the state of my projects and goals.

- Safe: It has “risk gates” so it can’t just empty my bank account.

Enter GLaDOS (Pending Legal Review)

And yes, the project is currently codenamed GLaDOS.

I am fully aware that the creator of “ClawdBot” recently got a polite letter from Anthropic regarding their name. I assume a similar letter from Valve is already making its way through the postal system. So, for the record: this is a working title. Please don’t sue me.

(But until that letter arrives, I’m hoping that if I treat it well, it won’t flood my home with neurotoxin. The cake is still pending.)

GLaDOS is a personal AI assistant built from the ground up to be persistent and context-aware.

Most assistants are just conversational UIs. They answer your question and then reset. GLaDOS is different. It maintains a User Model—a structured understanding of who I am. It knows that “the project” refers to GLaDOS itself right now, but might refer to “Home Renovations” next month. It knows my working hours, my preferred tools, and my long-term goals. It doesn’t just “chat”; it builds a relationship.

Memory that Matters (and Decays)

Most “memory” in AI agents today is just a vector database that retrieves “similar” chunks of text. That’s useful, but it gets noisy fast. If I ask “What are we working on?”, I don’t want a transcript of a standup meeting from 2023. I want the context.

GLaDOS implements a Memory Consolidation engine (inspired by human neuroscience) that solves two problems: Index Bloat and Unknown Unknowns.

- Activation-Based Decay: Every memory has an “activation score.” If I access a memory frequently (like my current project), it stays “hot” in the context. If I ignore it, it decays into a “cold” storage tier. It doesn’t disappear, but it stops cluttering the immediate working memory.

- Consolidation: A background job runs weekly (while I sleep) to wake up, read the logs of “cold” memories, and compress them into higher-level insights. It turns 50 logs of “I fixed a bug in the auth service” into a single semantic fact: “The auth service was unstable in Q1 2026.”

This solves the “Unknown Unknowns” problem. Standard RAG (Retrieval Augmented Generation) only finds what you explicitly search for. GLaDOS actively injects “Open Loops” (unresolved tasks or decisions) into the context if they are relevant to the current topic, even if I didn’t ask for them.

Proactivity: Emergent Behavior

The most fascinating part is that I didn’t actually build a “Proactivity Engine.” I just gave GLaDOS the ability to schedule itself.

Right now, GLaDOS has a trigger—one it created and maintains itself—that runs once an hour. It looks at a Change Log (a system that tracks events like calendar updates, task changes, etc.) to see what has happened in the world since it last woke up.

If it sees a meeting moved, it evaluates if I need to know now. If it decides to alert me, it uses the Continuation Context—a “note to self” passed from the last run—to ensure it doesn’t nag.

- Run 1 (08:00): “Train is delayed 10 mins. I notified Morten.”

- Run 2 (09:00): “Train is still delayed. Checking continuation… I already told him. I will stay silent.”

My hypothesis is that Memory + Time-based Triggers = Proactivity. You don’t need complex heuristic engines; you just need an agent that can remember what it did an hour ago.

The World Outside (and the Firewall)

Another piece of the puzzle is giving the agent eyes on the outside world. Here is the actual morning briefing GLaDOS sent me on Telegram today:

I didn’t write code for this. I just told it: “Send a daily brief each morning at 7. Include my calendar, the weather (use weather.com), my commute status (use trains.com), and news (use news.com).” (URLs and details changed for this example, but you get the idea).

It figured out how to scrape those sites. It used its User Model to know that “my commute” means taking the A line from Home to the Office—recalling a past conversation where I casually mentioned my daily route.

Even cooler? Because it saw that the next update for the trains was at 7:30, it self-scheduled a one-off trigger for 7:35 to check the status again, completely outside of its normal hourly schedule. That is the kind of proactivity you can’t easily script.

But wait, didn’t you say it was safe?

Yes. To stay true to my security model, GLaDOS operates on a Deny-by-Default outbound firewall. It cannot access the web without asking. For this task, I granted it a permanent whitelist rule for weather.com, trains.com, and news.com. For anything else, it has to ask permission first. It’s the perfect balance of autonomy and control.

Skills and the Context Budget

One of the biggest challenges with LLMs is the context window. You can’t just stuff every possible tool definition and documentation page into the prompt. It’s expensive, it’s slow, and it confuses the model.

GLaDOS uses a Skills System. Think of it like “downloading” a capability into its brain only when needed.

A “Skill” isn’t just a set of tools; it’s a bundle of Domain Knowledge (instructions, best practices, gotchas) that gets injected into the system prompt.

If I want to debug a Home Assistant automation, GLaDOS loads the HomeAutomation skill. Suddenly, it knows the API schema for my smart lights and the current state of the sensors. When we switch topics to writing a blog post, it unloads that skill and loads the Writer skill.

There is another school of thought here: Multi-Agent Orchestration. Instead of one agent loading skills, you have a swarm of specialized agents (a “Coder Agent”, a “Home Assistant Agent”, a “Scheduler Agent”) that talk to each other. I plan to explore that eventually when the system outgrows its current brain.

But for now, the Skills approach is surprisingly effective. Because GLaDOS is so targeted with its context, I can run it primarily on Gemini Flash. It’s fast, cheap, and capable when you give it exactly the right tools and context. While everyone else is burning money on Opus 4.5 to brute-force context, GLaDOS is vibing on a lightweight model, punching way above its weight class.

Vibecoding on Rails

Now, here is the wild part. This project is currently around 70,000 lines of TypeScript. It has 1,500 tests.

And I wrote it rapidly. I “vibecoded” it.

“Vibecoding” usually implies throwing code at the wall until it works, often resulting in a spaghetti mess that is impossible to maintain. But GLaDOS is different. It’s vibecoding on rails.

The secret sauce wasn’t just typing fast; it was strict architectural steering. I use a strong modular design, heavy documentation, and a spec-driven approach.

To be honest, saying the architecture is “clear” might be a stretch. I know the blueprint, but I haven’t seen half the implementation code because the AI wrote it. I act as the Architectural Guardrail.

My main job isn’t writing code anymore; it’s managing context. I act as the curator of Documentation and Specs.

It is a collaborative effort. I set the standards and define the high-level specs, but the agent is instructed to maintain them—automatically updating docs and fixing discrepancies as it encounters them in the code.

When I start a new coding session with Claude or Cursor, I don’t force it to read 70,000 lines of code to understand what’s going on. That’s inefficient and error-prone. Instead, I ensure the /docs folder is pristine. I have specs for the memory system, the trigger engine, and the architecture.

The AI “kick-starts” its understanding from these markdown files. It learns the why and the how from the docs, not by reverse-engineering the spaghetti.

When I sense the codebase drifting, I intervene. I steer the agent back to modular patterns. And when I realize I’ve failed and a module has become a tangled mess, I trigger a refactor session.

Because of the 1,500 tests, I can tear down a module and rebuild it without terrifying fear that the rest of the system will explode. It turns out that if you have a rigid structure and a safety net, you can move incredibly fast without breaking things. The tests are there to catch me (or the AI) when we inevitably trip up.

But first, the downsides

I should mention that this isn’t a silver bullet. Maintaining a 70k-line personal project is a hobby in itself. It requires discipline to keep the specs updated and the tests green. And sometimes, GLaDOS is still just a very fancy wrapper around an LLM that gets confused about what day it is.

It’s also not a product you can buy. It’s highly tailored to my workflow.

The Neuroscience Angle (aka What I Haven’t Solved Yet)

I was discussing the memory architecture with a colleague, and they pointed out two areas where GLaDOS (and most AI agents) are still too primitive compared to the human brain. These are next on my list to explore:

- Surprise Scoring: Right now, GLaDOS logs everything. But our brains don’t. We only remember the “surprising” things—the events that broke our prediction model (Google’s Titans architecture uses a similar concept). I need to implement a filter that discards the predictable routine and only keeps the anomalies.

- Non-Linear Decay: Currently, my decay algorithm is a simple half-life. But real learning works like Spaced Repetition. Accessing a memory that is fresh shouldn’t boost it much. But accessing a memory just as it’s about to fade? That should rescue it with a massive boost to stability. It’s the difference between cramming for an exam (forgotten in a week) vs. studying once a week (remembered for years).

The Future

This is still very much a prototype, but it feels like the future. I’m currently working on a messaging interface so I can talk to GLaDOS from anywhere, and a “Tool Builder” that allows it to write its own tools safely.

We are moving away from the era of “prompt engineering” and into the era of “context engineering.” GLaDOS is my attempt to build the infrastructure for that future.

If you are interested in the nitty-gritty details of the architecture, the User Model, or how the memory system works, you can poke around the repository. You can also check out my list of future ideas for what I plan to build next.

For now, I have some science to do.